Understanding LangChain 0.1.0: A Comprehensive Overview


Chapter 1: Introduction to LangChain 0.1.0

Greetings, everyone! In this article, we will delve into LangChain 0.1.0. Over the last year, LangChain has advanced significantly. The primary aim of version 0.1 has been to make it stable and ready for production use. This involved restructuring the package to improve versioning and overall reliability. The team actively consulted the community to identify which LangChain features people value most, and in this discussion I will spotlight their work in those key areas.

Each segment of this article will cover topics such as integrations, observability, streaming, composability, output parsing, retrieval, and agents, all supported by practical code demonstrations to enrich your learning experience. So, let’s embark on this journey together!


Section 1.1: The Importance of Observability

Observability is vital when developing intricate applications with language models. Whether you are dealing with lengthy chains or agents, understanding the internal workings of your application is crucial for improving it. LangChain has prioritized providing excellent observability: you can view the inputs and outputs of the language model as well as every intermediate step.

Let’s look at some of the observability options available:

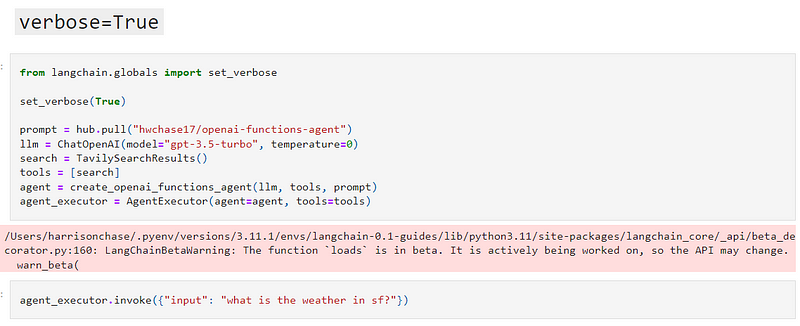

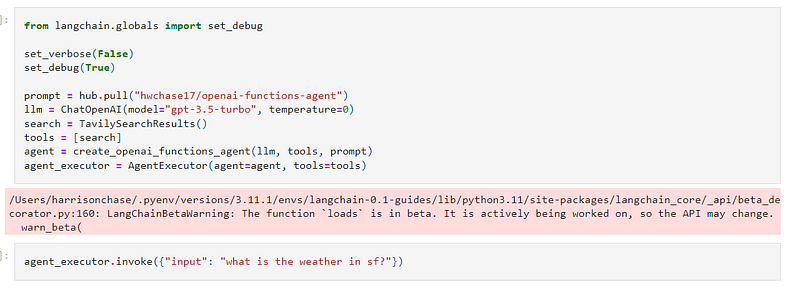

Verbose Mode: One of LangChain's original features, this mode prints the main steps taking place in your application, giving a concise overview without overwhelming detail.

Debug Mode: When you need more detail, Debug Mode logs comprehensive data about every step, including the exact prompts sent, the responses received, and token counts. It is particularly useful for complex applications that require in-depth analysis.
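In LangChain itself, these two modes are switched on globally with set_verbose(True) and set_debug(True) from langchain.globals. To show the difference between the two levels without needing an API key, here is a minimal, self-contained sketch. The run_steps helper and the fake model step are hypothetical stand-ins, not LangChain code: "verbose" records only which step is running, while "debug" also records each step's exact input and output.

```python
from typing import Any, Callable, List, Tuple

# Hypothetical toy pipeline: each step is a (name, function) pair.
Step = Tuple[str, Callable[[Any], Any]]


def run_steps(steps: List[Step], value: Any, mode: str = "quiet") -> Tuple[Any, List[str]]:
    """Run steps in order and return (final_value, log_lines).

    mode="verbose" records only when each step starts;
    mode="debug" additionally records each step's exact input and output.
    """
    log: List[str] = []
    for name, fn in steps:
        if mode in ("verbose", "debug"):
            log.append(f"[{name}] start")
        result = fn(value)
        if mode == "debug":
            log.append(f"[{name}] input={value!r} output={result!r}")
        value = result
    return value, log


steps: List[Step] = [
    ("prompt", lambda topic: f"Tell me a joke about {topic}"),
    # Hypothetical stand-in for an LLM call: it just upper-cases the prompt.
    ("fake_model", lambda prompt: prompt.upper()),
]

answer, verbose_log = run_steps(steps, "bears", mode="verbose")
_, debug_log = run_steps(steps, "bears", mode="debug")
print("\n".join(verbose_log))
print("\n".join(debug_log))
```

The verbose log holds two short lines, while the debug log interleaves them with the full input and output of every step, which is the trade-off between the two modes described above.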

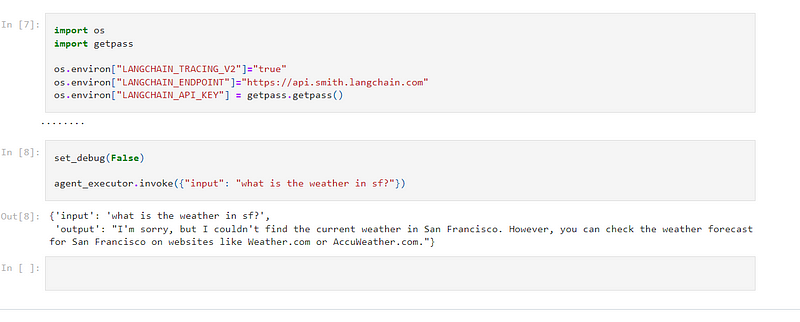

LangSmith Tool: For a more sophisticated and user-friendly way to monitor your application's activity, the LangChain team offers LangSmith. It logs each run of your application as a trace and presents the key events, inputs, and outputs in an intuitive web interface for analysis.
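Enabling LangSmith generally requires no changes to the chain itself: tracing is switched on through environment variables set before the chain runs. A minimal sketch, assuming you already have a LangSmith account and API key. The key below is a placeholder and the project name is hypothetical.

```python
import os

# Turn on LangSmith tracing for LangChain code running in this process.
os.environ["LANGCHAIN_TRACING_V2"] = "true"
# Placeholder: substitute the API key from your LangSmith account settings.
os.environ["LANGCHAIN_API_KEY"] = "<your-langsmith-api-key>"
# Optional, hypothetical project name used to group the traced runs.
os.environ["LANGCHAIN_PROJECT"] = "langchain-0-1-demo"
```

Chains invoked afterwards in the same process should then show up as traces under that project in the LangSmith interface.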

LangChain’s observability features are crafted to give you a comprehensive understanding of your application, which is essential for developing effective language model applications.

Section 1.2: Exploring Integrations

Now, let’s examine the broad spectrum of integrations LangChain offers and how they simplify the creation of complex language applications.

Integrations Overview: LangChain organizes its integrations into two main categories: by provider and by component. This structure lets users quickly locate what they need, whether that is a specific provider's tools or a particular type of component.

Provider-Based Integrations: Take OpenAI as an example: LangChain integrates with a range of its offerings, including language models and document loaders. If you have access to OpenAI's services, using them in your LangChain projects is straightforward.

Component-Based Integrations: LangChain also classifies integrations based on components such as language models, chat models, and document loaders. Each component is accompanied by detailed documentation and examples, facilitating a smooth start.

Unique Features: What distinguishes LangChain is its specialized toolkits and its ability to handle many document types. It is not solely about accessing language models; it is also about efficiently transforming and storing data.

Community Contributions: The LangChain community continuously introduces new integrations, with a dedicated page on langchain.com showcasing trending integrations, allowing users to share their favorites.

Updates and Improvements: LangChain has recently restructured how integrations are packaged. Third-party integrations now live outside the core package (in langchain-community and in standalone partner packages such as langchain-openai). This keeps the core code stable, makes versioning clearer, and leaves LangChain more robust and production-ready.

Chapter 2: The Composability of LangChain

What is Composability? The intent behind enhancing LangChain’s composability is simple: it allows for easy modifications and customizations of chains. Users often need to adjust not only prompts but also data processing and operation sequences. Composability simplifies these tasks.

Introducing LangChain Expression Language: LangChain Expression Language (LCEL) is a declarative way to compose components into chains, typically by joining them with the | operator. It is designed to streamline building and modifying language model applications, and it offers several advantages over wiring the same logic together by hand.

Key Features of LangChain Expression Language:

- Streaming Support: Essential for real-time processing in language model applications.

- Async and Batch Support: The same chain can run synchronously, asynchronously, or over a batch of inputs without being rewritten.

- Parallel Execution: Independent steps run concurrently, reducing overall latency.

- Retries and Fallbacks: Increases resilience against failures and unexpected outputs.

- Access to Intermediate Results: Facilitates straightforward debugging and user interaction.

- Observability: Automatically logs all steps for effortless tracking and analysis.
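Two of these features, retries and fallbacks, are easy to picture in plain Python. In LangChain they are exposed as methods on a chain (with_retry and with_fallbacks); the sketch below is not that implementation, only a minimal stdlib illustration of the same idea. The flaky_model, broken_model, and backup_model functions are hypothetical stand-ins for real model calls.

```python
from typing import Any, Callable, Sequence

Fn = Callable[[Any], Any]


def with_retry(fn: Fn, attempts: int = 3) -> Fn:
    """Return a wrapper that calls fn up to `attempts` times before re-raising."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    def wrapped(x: Any) -> Any:
        for attempt in range(1, attempts + 1):
            try:
                return fn(x)
            except Exception:
                if attempt == attempts:
                    raise  # out of attempts: surface the last error

    return wrapped


def with_fallbacks(primary: Fn, fallbacks: Sequence[Fn]) -> Fn:
    """Return a wrapper that tries primary, then each fallback in order."""

    def wrapped(x: Any) -> Any:
        try:
            return primary(x)
        except Exception as first_error:
            for backup in fallbacks:
                try:
                    return backup(x)
                except Exception:
                    continue
            raise first_error  # every option failed: report the original error

    return wrapped


calls = {"n": 0}


def flaky_model(prompt: str) -> str:
    """Hypothetical model that times out twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("simulated provider timeout")
    return f"answer to: {prompt}"


def broken_model(prompt: str) -> str:
    """Hypothetical model that is always down."""
    raise RuntimeError("simulated outage")


def backup_model(prompt: str) -> str:
    """Hypothetical secondary model."""
    return f"backup answer to: {prompt}"


retried = with_retry(flaky_model, attempts=3)("hi")
fell_back = with_fallbacks(broken_model, [backup_model])("hi")
print(retried)    # succeeded on the third attempt
print(fell_back)  # served by the backup model
```

A production version would usually also pause between attempts and retry only on selected exception types; LangChain's with_retry, for instance, applies exponential backoff with jitter by default.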

Ease of Use: LangChain Expression Language gives every object the same interface (invoke, batch, and stream, plus their async counterparts), so components fit together predictably. Many guides and resources are also available for newcomers.

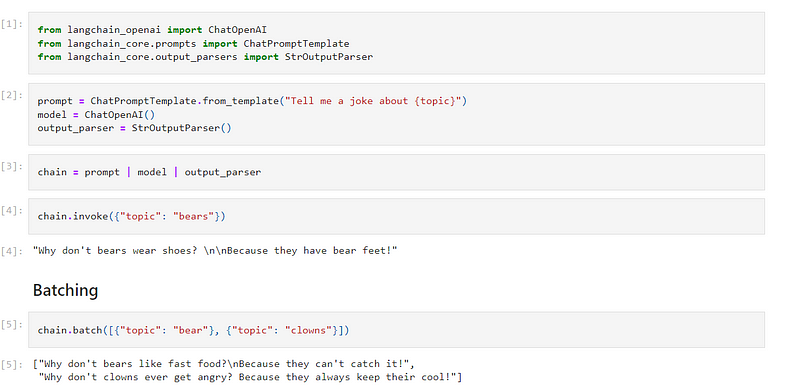

Practical Applications: Let’s go through a simple example utilizing LangChain Expression Language. Imagine crafting a chain that includes a language model and an output parser. With just a few lines of code, invoking this chain yields immediate results.

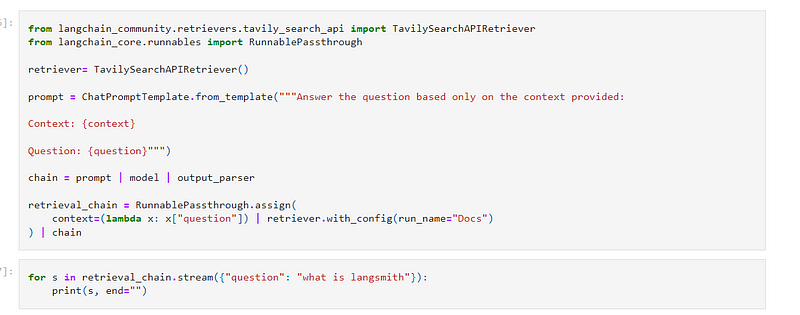
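In LangChain, a chain like this is typically written as prompt | model | StrOutputParser() and run with chain.invoke(...). To show what the | operator is doing without requiring an API key, here is a toy sketch. The Step class is hypothetical and only mimics the shape of LCEL composition: each piece takes one input and returns one output, and a | b feeds a's output into b. fake_model stands in for a real chat model.

```python
from typing import Any, Callable, List


class Step:
    """Toy stand-in for an LCEL component: wraps a function and supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def invoke(self, value: Any) -> Any:
        return self.fn(value)

    def batch(self, values: List[Any]) -> List[Any]:
        # Same chain, many inputs (run sequentially in this toy version).
        return [self.invoke(v) for v in values]

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new Step that passes a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))


prompt = Step(lambda inputs: f"Tell me a short joke about {inputs['topic']}")
# Hypothetical model: returns a message-like dict instead of calling an LLM.
fake_model = Step(lambda text: {"content": f"<model reply to: {text}>"})
# Plays the role of an output parser that extracts the message text.
parser = Step(lambda message: message["content"])

chain = prompt | fake_model | parser
result = chain.invoke({"topic": "ice cream"})
results = chain.batch([{"topic": "cats"}, {"topic": "dogs"}])
print(result)
```

Because every piece exposes the same invoke method, any step can be swapped (a different prompt, a real model, another parser) without touching the others, which is the composability argument made earlier in this chapter.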

Advanced Applications: LangChain Expression Language also simplifies more intricate tasks such as retrieval-augmented generation (RAG). You can build up a dictionary step by step (for example, the retrieved documents alongside the original question) and pass it through the remaining steps to produce a better-grounded answer.
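In LangChain, this pattern usually looks like {"context": retriever, "question": RunnablePassthrough()} | prompt | model | parser, where a dictionary of components runs each entry on the same input. The sketch below spells out the same flow step by step in plain Python. The three documents, the word-overlap toy_retriever, and the prompt wording are all hypothetical; a real application would use an embedding-based retriever and send the final prompt to a model.

```python
import re
from typing import Dict, List, Set

# Hypothetical mini knowledge base.
DOCS = [
    "LangChain 0.1.0 was released with a focus on stability.",
    "LCEL composes components with the | operator.",
    "LangSmith records traces of each run.",
]


def words(text: str) -> Set[str]:
    """Lower-cased alphabetic words in text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def toy_retriever(question: str, k: int = 1) -> List[str]:
    """Hypothetical retriever: rank documents by words shared with the question."""
    q = words(question)
    ranked = sorted(DOCS, key=lambda doc: len(q & words(doc)), reverse=True)
    return ranked[:k]


def build_rag_inputs(question: str) -> Dict[str, object]:
    # Step 1: start the dictionary with the user's question.
    state: Dict[str, object] = {"question": question}
    # Step 2: add the retrieved context alongside it.
    context = toy_retriever(question)
    state["context"] = context
    # Step 3: combine both into the prompt a model would receive.
    state["prompt"] = (
        "Answer using only this context:\n"
        + "\n".join(context)
        + f"\n\nQuestion: {question}"
    )
    return state


state = build_rag_inputs("How does LCEL compose components?")
print(state["prompt"])
```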

Understanding Streaming in AI

In the context of generative AI, streaming refers to delivering responses from AI models in real time as they are generated. This is crucial since responses from language models may take time, and streaming ensures users are aware that the system is actively processing.

Why is Streaming Important?

- User Experience: Engages users by displaying real-time progress.

- Complex Operations: Essential for applications that involve multiple AI calls, such as chains or agents, where each step may require time.

LangChain 0.1.0 and Streaming: Version 0.1 was developed with a strong focus on streaming. Because components are composed with the LangChain Expression Language, they all support the same set of streaming methods.

Key Streaming Methods in LangChain:

- Stream Method: Provides tokens as they are produced.

- Async Stream Method: For use in asynchronous environments.

- Intermediate Step Streaming: Useful for complex chains to display intermediate processes.
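On an LCEL chain these correspond to the stream, astream, and astream_log methods. The first two are, at heart, iterators (sync or async) of chunks. The sketch below imitates them with a hypothetical fake model that yields one word at a time, so the consuming loop can display each chunk as soon as it exists instead of waiting for the whole reply. With a real chain you would loop over chain.stream(...) in the same way.

```python
import asyncio
import time
from typing import AsyncIterator, Iterator

REPLY = "Why did the bear cross the road?"


def fake_stream(text: str, delay: float = 0.05) -> Iterator[str]:
    """Hypothetical model that yields its reply one word at a time."""
    for i, word in enumerate(text.split(" ")):
        time.sleep(delay)  # simulate per-token generation latency
        yield word if i == 0 else " " + word


async def fake_astream(text: str, delay: float = 0.05) -> AsyncIterator[str]:
    """Async version: awaiting lets other tasks run while we 'generate'."""
    for i, word in enumerate(text.split(" ")):
        await asyncio.sleep(delay)
        yield word if i == 0 else " " + word


# Synchronous consumer: show each chunk the moment it arrives.
for chunk in fake_stream(REPLY):
    print(chunk, end="", flush=True)
print()


async def collect() -> str:
    # Asynchronous consumer: same idea, using `async for`.
    parts = []
    async for chunk in fake_astream(REPLY):
        parts.append(chunk)
    return "".join(parts)


streamed = asyncio.run(collect())
```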

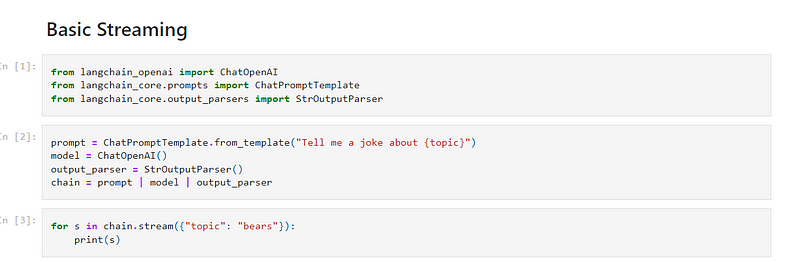

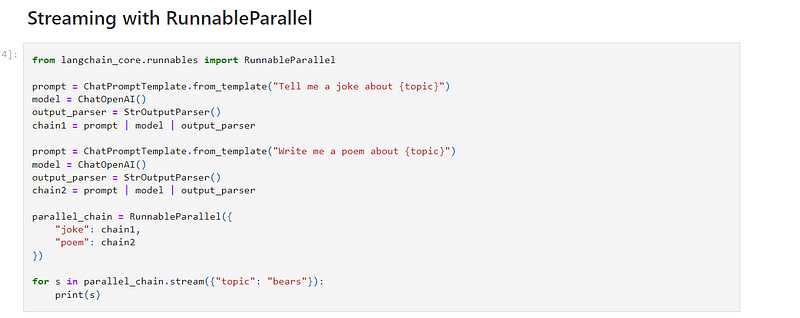

Demonstration with a Notebook:

- Basic Streaming: We create a simple chain (prompt, model, output parser) to demonstrate real-time streaming of responses.

- Parallel Streaming: Showcasing streaming with parallel chains, such as telling a joke and writing a poem simultaneously, illustrates how responses from various tasks interweave in real time.

- Stream Log Method: Useful for revealing intermediate steps in complex processes, particularly in retrieval-augmented generation (RAG). This allows users to view the documents retrieved as part of the AI’s decision-making process.
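In LangChain, running the joke and poem chains side by side is done with a RunnableParallel (or simply a dict of chains), and streaming it yields small dictionaries keyed by branch name as each branch produces output. The sketch below reproduces that interleaving with the standard library's asyncio: two hypothetical branches produce words at different speeds, and a hypothetical stream_parallel helper forwards (branch, chunk) pairs in whatever order they arrive. The exact interleaving depends on timing, but each branch's own chunks always stay in order.

```python
import asyncio
from typing import AsyncIterator, Dict, List, Tuple


async def fake_branch(words: List[str], delay: float) -> AsyncIterator[str]:
    """Hypothetical chain that emits one word every `delay` seconds."""
    for word in words:
        await asyncio.sleep(delay)
        yield word


async def stream_parallel(
    branches: Dict[str, AsyncIterator[str]],
) -> AsyncIterator[Tuple[str, str]]:
    """Merge several async streams, yielding (branch_name, chunk) as chunks arrive."""
    queue: asyncio.Queue = asyncio.Queue()
    done = object()  # sentinel: one branch has finished

    async def pump(name: str, stream: AsyncIterator[str]) -> None:
        try:
            async for chunk in stream:
                await queue.put((name, chunk))
        finally:
            await queue.put((name, done))  # always signal completion, even on error

    tasks = [asyncio.create_task(pump(n, s)) for n, s in branches.items()]
    remaining = len(tasks)
    while remaining:
        name, chunk = await queue.get()
        if chunk is done:
            remaining -= 1
        else:
            yield name, chunk
    await asyncio.gather(*tasks)  # re-raise any error from a branch


async def main() -> List[Tuple[str, str]]:
    branches = {
        "joke": fake_branch(["Why", "did", "the", "bear..."], delay=0.01),
        "poem": fake_branch(["Roses", "are", "red..."], delay=0.015),
    }
    received = []
    async for name, chunk in stream_parallel(branches):
        print(f"{name}: {chunk}")
        received.append((name, chunk))
    return received


received = asyncio.run(main())
```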

Streaming with Agents: In LangChain, agents can execute multiple actions, and it’s essential to stream these actions to the user. The agent executor streams the actions taken, not just the final tokens, providing a clearer perspective of the process.

Practical Example with Agents: We will demonstrate an agent performing a weather query. Streaming reveals each action taken by the agent, such as retrieving weather data for different cities and the corresponding responses from the language model.
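In LangChain 0.1, iterating over an AgentExecutor's stream(...) output yields chunks that describe the actions the agent chose, the observations the tools returned, and finally the output; the precise chunk format is worth checking in the documentation. The sketch below imitates that event flow without an LLM. get_weather and its canned readings are hypothetical placeholders, and the plan (one lookup per city) is hard-coded where a real agent would let the model decide.

```python
from typing import Dict, Iterator, List

# Hypothetical canned readings standing in for a real weather API.
FAKE_WEATHER = {"San Francisco": "64 F, foggy", "Boston": "38 F, clear"}


def get_weather(city: str) -> str:
    """Hypothetical tool: look up the canned weather for a city."""
    return FAKE_WEATHER.get(city, "unknown")


def run_agent(cities: List[str]) -> Iterator[Dict[str, str]]:
    """Toy agent loop that streams every action and observation, then the answer.

    A real agent would ask the language model which tool to call next;
    here the plan (one weather lookup per city) is hard-coded.
    """
    findings = []
    for city in cities:
        yield {"type": "action", "tool": "get_weather", "input": city}
        observation = get_weather(city)
        findings.append(f"{city}: {observation}")
        yield {"type": "observation", "output": observation}
    yield {"type": "final", "output": "; ".join(findings)}


for event in run_agent(["San Francisco", "Boston"]):
    print(event)  # each step reaches the user as soon as it happens
```

Because run_agent is a generator, the caller sees the first action before the second lookup has even started, which is the behavior that makes agent streaming useful.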

Closing Thoughts:

Streaming in LangChain adds a dynamic and interactive aspect to AI applications, significantly enhancing the user experience. Whether dealing with a simple query or a complex series of actions, streaming ensures clarity and engagement.


The first video, titled "LangChain Version 0.1 Explained | New Features & Changes", provides an overview of the latest updates and enhancements in LangChain 0.1.

The second video, titled "Create a RAG Chain using LangChain 0.1 (New version)", demonstrates how to build a retrieval-augmented generation chain with the new features in LangChain 0.1.


Thank you for being part of the In Plain English community!