Understanding Multi-Layer Perceptrons: A Beginner's Guide

Chapter 1: Introduction to Neural Networks

Imagine this scenario: you're organizing your digital files into folders again. You meticulously move each file, only to realize hours have passed. Many of us can relate to this experience, but it doesn't have to be tedious. What if there were a smart program capable of sorting these files on your behalf, with remarkable precision? Allow me to introduce the neural network!

A neural network is a computer program designed to identify patterns in data. Rather than following hand-written rules, it learns to predict outcomes by analyzing thousands of examples provided to it. Once trained, it can carry out pattern-recognition tasks, such as sorting files, far faster than a person could by hand.

In essence, a Multi-Layer Perceptron (MLP) is a specific type of neural network made up of several interconnected perceptrons, which are mathematical functions. Each perceptron is linked to others, with varying connection strengths, or weights, that influence the network's output. MLPs are a kind of feedforward artificial neural network, meaning data flows in one direction, from input to output.

Neural Networks Explained

Neural networks represent a branch of artificial intelligence (AI). AI is ubiquitous in our daily lives, from voice assistants like Amazon Alexa to self-driving vehicles by Waymo, online shopping recommendations, and even drug discovery. These networks use mathematical algorithms to detect patterns within data, then use those patterns to make predictions about new data. They are loosely modeled on the human brain and consist of artificial neurons. Each neuron is a mathematical equation that takes inputs, applies weights to them, and passes the result on to subsequent neurons.

You might find the terminology overwhelming at first, but it helps to grasp the basics of how the human brain works, as AI developers base neural networks on this model. The human brain consists of approximately 86 billion neurons that communicate through electrical signals when stimulated. Different brain regions handle various functions, such as emotions, reasoning, and memory, and all of them are composed of these neurons.

Chapter 2: The Functionality of Multi-Layer Perceptrons

An artificial neural network seeks to imitate the brain's processes, though in a much simpler form. Given the brain's complexity, programmers reduce its thought processes to a set of mathematical equations that data can traverse to yield results.

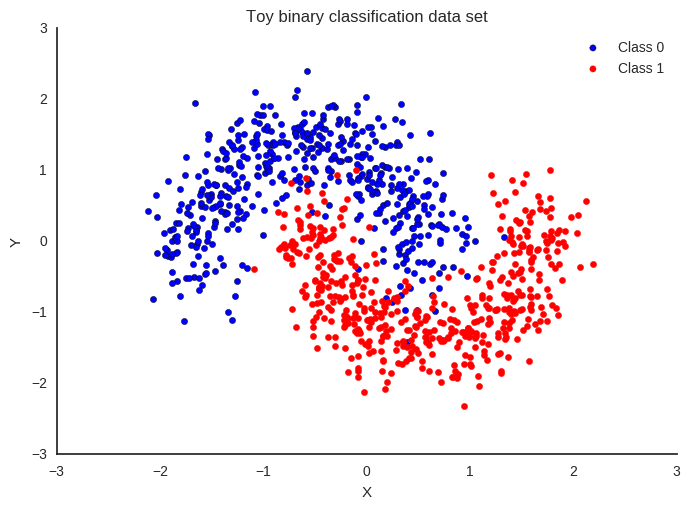

Perceptrons are the fundamental building blocks of an MLP. Each perceptron converts multiple inputs into a single output, which ranges from 0 to 1 when a sigmoid activation function is used. That output is then passed to the next layer of perceptrons, and so on until a final output is produced. An MLP has at least three layers: an input layer, one or more hidden layers, and an output layer. The hidden layers are what allow the network to separate data that cannot be divided by a straight line, as illustrated below:

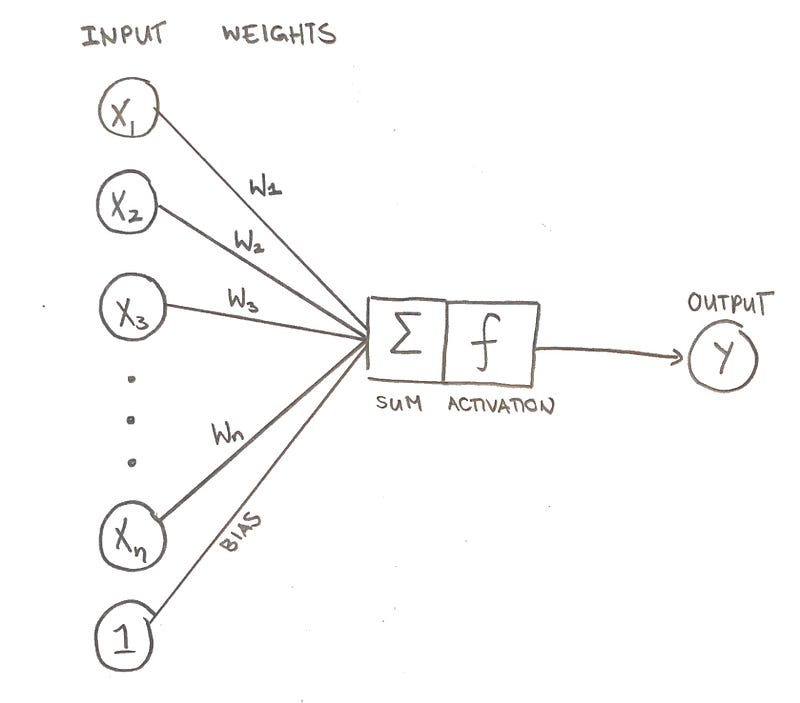

Weights are central to how an MLP works. As each input passes through the layers, it is multiplied by weights that indicate the strength of the connections between neurons.

Consider weights as familial relationships; your brother is closer to you than your distant cousin. Similarly, a higher weight denotes a closer connection, while a lower weight signifies a more distant relation.

Another essential element is the bias, a constant added to the weighted sum that shifts a neuron's result up or down. Without a bias, a neuron whose inputs are all zero could only ever produce one fixed value, so the bias is often necessary to achieve the desired output.

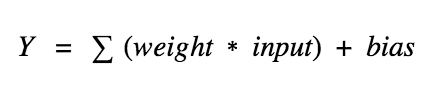

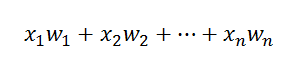

The output of each neuron is calculated by determining the weighted sum of its inputs, followed by adding the bias.

For instance, if all inputs are 1 and their corresponding weights are also 1, the calculation unfolds as follows:

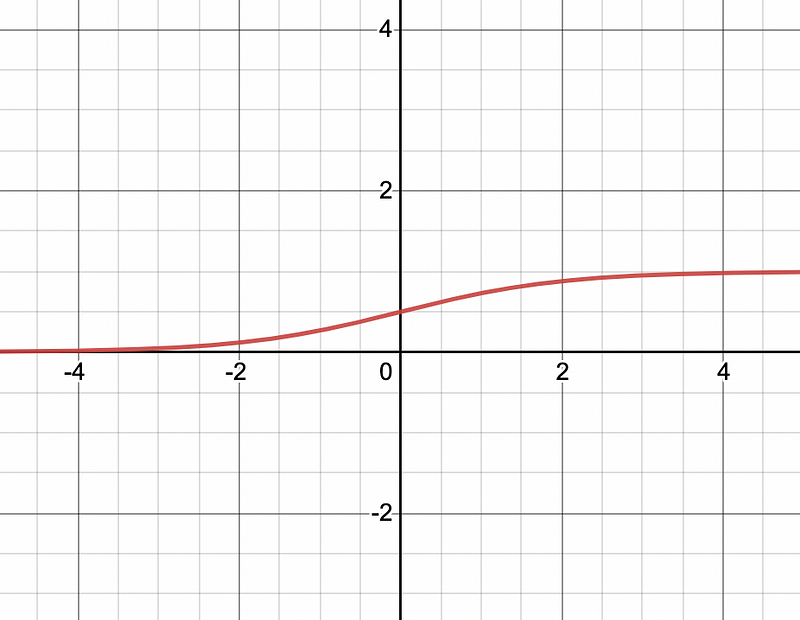
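To make the weighted-sum step concrete, here is a minimal Python sketch. The function name `neuron_pre_activation`, the choice of three inputs, and the bias of 0 are assumptions made for illustration; they are not taken from the figure above.

```python
def neuron_pre_activation(inputs, weights, bias):
    """Return the weighted sum of the inputs plus the bias."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Assumed example values: three inputs of 1, three weights of 1, bias of 0.
inputs = [1.0, 1.0, 1.0]
weights = [1.0, 1.0, 1.0]
bias = 0.0

z = neuron_pre_activation(inputs, weights, bias)
print(z)  # 1*1 + 1*1 + 1*1 + 0 = 3.0
```

Changing a weight changes how much its input contributes to the sum, while changing the bias shifts the whole result by a constant.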

Afterward, the value is passed through an activation function, which transforms the weighted sum into the neuron's output. Various activation functions exist. The sigmoid function is a classic choice in introductory examples because it squashes any value into a range between 0 and 1 with a smooth transition.
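As a rough illustration, the sigmoid function can be written in a few lines of Python. This is a sketch rather than a production implementation; the two-branch form is one common way to avoid overflow for very negative inputs.

```python
import math


def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, rewrite the formula so exp() never receives a large positive number.
    e = math.exp(z)
    return e / (1.0 + e)


# Negative values land below 0.5, zero maps to exactly 0.5, positive values land above 0.5.
for z in (-3.0, 0.0, 3.0):
    print(z, round(sigmoid(z), 3))
```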

If you seek further understanding of other activation functions, feel free to explore additional resources.

MLPs — A System of Interconnected Perceptrons

The complexity of a neural network varies greatly with the number of neurons and layers it uses. In an MLP, the output of each perceptron in one layer becomes an input to the perceptrons in the next layer, so every layer builds on the patterns detected by the one before it.
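The layer-to-layer flow described above can be sketched in plain Python. The network shape (2 inputs, 3 hidden neurons, 1 output) and all weight and bias values below are made up for illustration, and the helper names `layer_forward` and `mlp_forward` are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute one layer's outputs; weights[j] holds the incoming weights of neuron j."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def mlp_forward(inputs, layers):
    """Feed the inputs through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        # The outputs of this layer become the inputs of the next one.
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical 2-3-1 network with arbitrary (untrained) weights and biases.
layers = [
    ([[0.5, -0.5], [1.0, 1.0], [-1.0, 0.5]], [0.0, -0.5, 0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                 # output layer
]

output = mlp_forward([1.0, 0.0], layers)
print(output)  # a single value between 0 and 1
```

Because the weights here are arbitrary, the printed value carries no meaning yet; it only shows how data moves through the layers.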

Training an MLP

It's essential to note that a neural network won't perform well from the outset. Initially, weights are assigned randomly, leading to subpar results. To improve accuracy, the network is trained on a series of labeled practice inputs, a process known as supervised learning. Whenever the output is incorrect, the weights and biases are adjusted to reduce the error. This iterative process continues until the MLP accurately classifies the training data.

Think of this like preparing for a math exam. Your teacher provides practice questions to help you master the material. When the actual test arrives, you apply your learned skills to new questions.

In this analogy, training data represents practice questions, while test data is akin to the final exam.
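To make the adjust-on-error loop concrete, here is a deliberately simplified sketch. It trains a single perceptron with a step activation using the classic perceptron learning rule, not a full MLP (which would normally be trained with backpropagation). The AND-gate data, the learning rate, and the function names are assumptions chosen for illustration, and the sketch only checks the practice questions, not a separate exam.

```python
import random


def step(z):
    """Step activation: output 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def predict(inputs, weights, bias):
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)


def train_perceptron(examples, learning_rate=0.1, max_epochs=1000, seed=0):
    rng = random.Random(seed)
    # Start from random weights and bias, as described in the text.
    weights = [rng.uniform(-1.0, 1.0) for _ in examples[0][0]]
    bias = rng.uniform(-1.0, 1.0)
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, label in examples:
            error = label - predict(inputs, weights, bias)
            if error != 0:
                mistakes += 1
                # Nudge each weight and the bias in the direction that reduces the error.
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:
            break  # every training example is classified correctly
    return weights, bias


# Labeled training data: the logical AND of two inputs.
and_gate = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(and_gate)

for inputs, label in and_gate:
    print(inputs, "->", predict(inputs, weights, bias), "expected", label)
```

A single perceptron like this can only learn data that a straight line can separate, which is one reason MLPs add hidden layers.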

Why Does This Matter?

MLPs offer an efficient means of automating data categorization. After training, a neural network can classify data much more quickly than a person could manually. MLPs appear in a variety of applications, from image recognition and data categorization to personalized online shopping suggestions.

MLPs play a significant role across diverse fields, and now you have a deeper understanding of their importance!
